A brief 200-year history of synesthesia

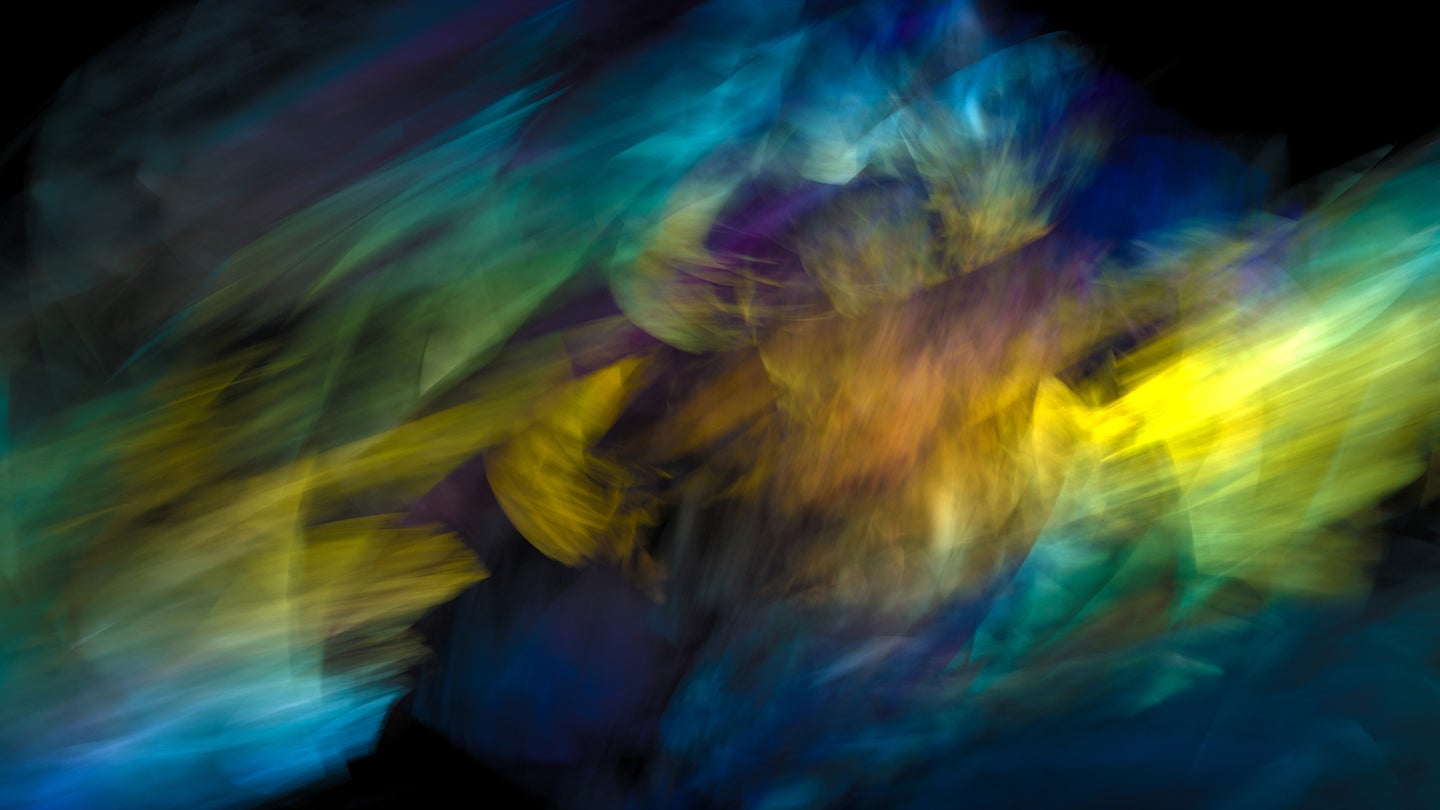

Richard Cytowic, a pioneering researcher who returned synesthesia to mainstream science, traces the historical evolution of our understanding of the phenomenon.

This article was originally featured on MIT Press.

There is no reason to think that synesthesia hasn’t existed throughout all of human history. We just don’t have adequate records to make a reliable determination. Famous thinkers such as Aristotle, Johann Wolfgang von Goethe, and Sir Isaac Newton reasoned by analogy (an accepted scientific method until the end of the 17th century) across different dimensions of perception to pair, for example, a sound frequency with a given wavelength of light.

The first photograph of a synesthetic individual dates from 1872. It is of eight-year-old Ellen Emerson, daughter of Ralph Waldo Emerson. The philosopher Henry David Thoreau, a close family friend, wrote to Ellen’s father in 1845: “I was struck by Ellen’s asking me … if I did not use ‘colored words.’ She said that she could tell the color of a great many words, and amused the children at school by doing so.”

This spare description is evidence enough that Ellen Emerson was indeed a synesthete. Colored words are a common type of synesthesia. Her assumption that others also see them that way is likewise typical. Jörg Jewanski at the University of Münster notes that “since Thoreau was ‘struck,’ we can assume it was not just the amusing game of a child.” Apparently this was the first time he had heard of such an experience, and it was “unusual to him.”

Professor Jewanski has also unearthed the first reported clinical case of synesthesia. It is in the form of an 1812 medical dissertation, written in Latin, by Georg Tobias Ludwig Sachs. As a polymodal synesthete (someone who experiences synesthesia involving more than one sense), Sachs cited examples of his “color synesthesia for letters of the alphabet, for tones of the musical scale, for numbers, and for days of the week.” Sporadic medical reports followed, but these all concerned adults—a finding that raises the question, Where were all the synesthetic children in the 60 years leading up to the Emerson case? And why were they afterward in apparently short supply? After all, individuals typically say they have had synesthesia as far back as they can remember. Its consistent expression over time likewise points to roots in childhood.

Modern scientists have been studying synesthetic children in depth, including neonates, since 1980. Their investigations have influenced theories about how the phenomenon develops in the brain, and so the absence of childhood reports before the 20th century remains a historical puzzle. The term synesthesia did not exist between 1812 and 1848, but that explains only part of the gap. One consequence of the gap is that data sets from the large number of 19th-century statistical studies are completely unknown to all but a handful of researchers today. Contemporary investigators may possess superior methods, but they are liable to retread old ground if they are unaware that people working long ago had already posed, and sometimes answered, key questions about the phenomenon.

Interest in synesthesia accelerated after 1880 when the influential polymath Sir Francis Galton, Charles Darwin’s cousin, wrote about “visualized numerals” in the prestigious journal Nature. Three years later he noted the strong tendency for synesthesia to run in families. Steadily, the number of peer-reviewed papers increased. In the same year that Galton’s first paper appeared, the ophthalmologist F. Suarez de Mendoza published a book in French, “L’audition colorée.” Not until 1927 did a German-language book on synesthesia appear, Annelies Argelander’s “Das Farbenhören und der synästhetische Faktor der Wahrnehmung.” A book-length treatment in English would still be decades off, but suddenly the topic seemed to fill the salons of fin de siècle Europe. Composers, painters, and poets piled on, even proponents of automatic writing, spiritualism, and theosophy. The zeitgeist of the time unfortunately emphasized the idea of sensory correspondences, which overshadowed attention to synesthesia as a perceptual phenomenon. Two famous poems of the period still taught today are “Correspondances” by Charles Baudelaire and “Voyelles” by Arthur Rimbaud. Given the cultural atmosphere during this burst of romanticism, it is easy to see how synesthesia gained an iffy reputation.

To make matters worse, behaviorism came onto the scene—an inflexible ideology that regarded the observation of behavior rather than the conscious introspection of experience as the only correct way to approach psychology. Behaviorism peaked in influence between 1920 and 1940. A marked drop in scientific papers occurred during this time, only to rebound during a second renaissance in the late 1980s.

In the 19th century and earlier, introspection was a common and respected experimental technique. But then medicine began to distinguish symptoms such as pain, dizziness, or ringing in the ears as subjective states “as told by” patients, from signs like inflammation, paralysis, or a punctured eardrum that a physician could see as observable facts. This brings us full circle back to synesthesia’s fundamental lack of outward evidence that could satisfy the science of its day. Many decades after behaviorism had fallen from favor, modern science still rejected self-reports and references to mental states as unfit material for study. As a methodology, introspection was considered unreliable because it was unverifiable—again, the chasm of first-person versus third-person reports.

The persistent distrust of verbal reports arose not because scientists thought people were lying about what they experienced but because of a remarkable discovery: All of us routinely fabricate plausible-sounding explanations that have little, if anything, to do with the actual causes of what we think, feel, and do. This must be so for reasons of energy cost, which forces the bulk of what happens in the brain to be outside consciousness.

To understand this counterintuitive arrangement it helps to think of a magician’s trick. The audience never perceives all the steps in its causal sequence—the special contraptions, fake compartments, hidden accomplices. It sees only the final effect. Likewise, the real sequence of far-flung brain events that cause a subjective experience or overt action is massively more than the sequence we consciously perceive. Yet we still explain ourselves with the shortcut “I wanted to do it, so I did it,” when the neurological reality is “My actions are determined by forces I do not understand.”

Hard as it is to imagine now, this attitude rendered all aspects of memory, inner thought, and emotion taboo for a long time. These were relegated to psychiatry and philosophy. As late as the 1970s when I trained in neurology, my interest in aphasia (a loss of language) and split-brain research got me labeled “philosophically minded” because all firsthand experience was dismissed as outside neurology’s proper purview.

The science of the day was simply not up to the task before it. For any phenomenon to be called scientific it must be real and repeatable, have a plausible mechanism explainable in terms of known laws, and have far-reaching implications that sometimes cause what Thomas Kuhn called a paradigm shift. Psychology at the time wasn’t up to the challenge either. It, too, was an immature science, jam-packed with ill-defined and untestable “associations.” It did not yet know about priming, masking, pop-out matrices with hidden figures, or any number of optical and behavioral techniques now at our disposal that show synesthesia is perceptually real. The idiosyncratic nature of the phenomenon was a major obstacle that earlier science couldn’t explain, whereas today we can account for differences among individuals in terms of neural plasticity, genetic polymorphism, and environmental factors present both in the womb and during the formative years of early childhood.

The 19th-century understanding of nervous tissue was likewise paltry compared to what we know now. If clinicians spoke of synesthesia at all, they spoke of vague “crossed connections” between equally ill-defined “nerve centers.” But such tentative ideas were neither plausible nor testable. If we didn’t understand how standard perception worked, then how could the science of the time possibly explain an outlier like synesthesia? It knew little about how fetal brains develop, the powerful role of synaptic pruning, or how interactions between genetics and environment uniquely sculpt each brain (which is why identical twins often have different temperaments). The enormous fields of signal transduction and volume transmission likewise remained undiscovered until the 1960s. Volume transmission is the conveyance of information via small molecular messengers and diffusible gases not only in the brain but also throughout the entire body. If you think of the physical wiring of axons and synapses as a train going down a track, then volume transmission is the train leaving the track. All these concepts were beyond our earlier comprehension.

Today we can test hypotheses about cross-connectivity and how neural networks establish themselves as needed, self-calibrate, and then disband. We do this through a variety of anatomical and physiological tools that range from diffusion tensor imaging to magnetoencephalography. As for synesthesia upending the status quo and causing a paradigm shift, it had to wait until orthodoxy could no longer object. By the early 2000s, the brain pictures that a critical establishment had demanded for decades were at last at hand, and in abundance. Critics were silenced, and long-standing dogmatic notions of how the brain is organized were out. The meaning of the paradigm shift lay in realizing just how consequential synesthesia is. Far from being a mere curiosity, it has proven to be a window onto an enormous expanse of mind and brain.

Since the early reports of Georg Sachs and Francis Galton, our understanding has changed immensely, particularly over the past two decades. There is every reason to expect that the framework for the why and how of synesthesia will continue to change. That is the nature of science: Answer one question, and 10 new ones arise. Science is never “settled,” as President Barack Obama falsely liked to say. Long-entrenched ideas can be overthrown by new evidence. For example, “everyone knew” since the 1800s that stomach ulcers were caused by excess acid. Standard treatment consisted of a bland diet and surgery to cut out the stomach’s eroded parts. Barry Marshall was ridiculed and dismissed by the medical establishment when he suggested in 1982 that the bacterium, Helicobacter pylori, was the true cause of ulcers. His work eventually won the 2005 Nobel Prize, and today ulcers are cured by a short course of antibiotics.

Similarly, James Watson and Francis Crick discovered the double helix of DNA in 1953. Today, as genetics underlies all modern biology, it is astonishing that a small change in one’s DNA dramatically alters one’s perception of the world. The most profound question is why the genes for synesthesia remain so prevalent in the general population. Remember that about one in 30 people walks around with a mutation for an inwardly pleasant but apparently useless trait. It costs too much in wasted energy to hang on to superfluous biology, so evolution should have jettisoned synesthesia long ago. The fact that it didn’t means that it must be doing something of high, if not obvious, value. Perhaps the pressure to maintain it stays high because the increased connectivity in the brain supports metaphor: seeing the similar in the dissimilar and forging connections between the two. Understanding the laws behind the ability could give us an unprecedented handle on the development of language and abstract thinking, to say nothing of creativity.

Synesthesia has already caused a paradigm shift in two senses. For science, it has forced a fundamental rethinking about how brains are organized. It is now beyond dispute that cross talk happens in all brains; synesthetes simply have more of it, and it takes place in existing circuits.

The other paradigm shift lies within each individual. What synesthesia shows is that not everyone sees the world as you do. Not at all. Eyewitnesses famously disagree on the same “facts.” Others have different points of view than you do, and all are true. Synesthesia highlights how each brain filters the world in its own uniquely subjective way.

Richard E. Cytowic, M.D., MFA, a pioneering researcher in synesthesia, is Professor of Neurology at George Washington University. He is the author of several books, including “Synesthesia: A Union of the Senses,” “The Man Who Tasted Shapes,” “The Neurological Side of Neuropsychology,” and “Synesthesia,” from which this article is excerpted.